API Key Misconfiguration — 401 Auth Outage

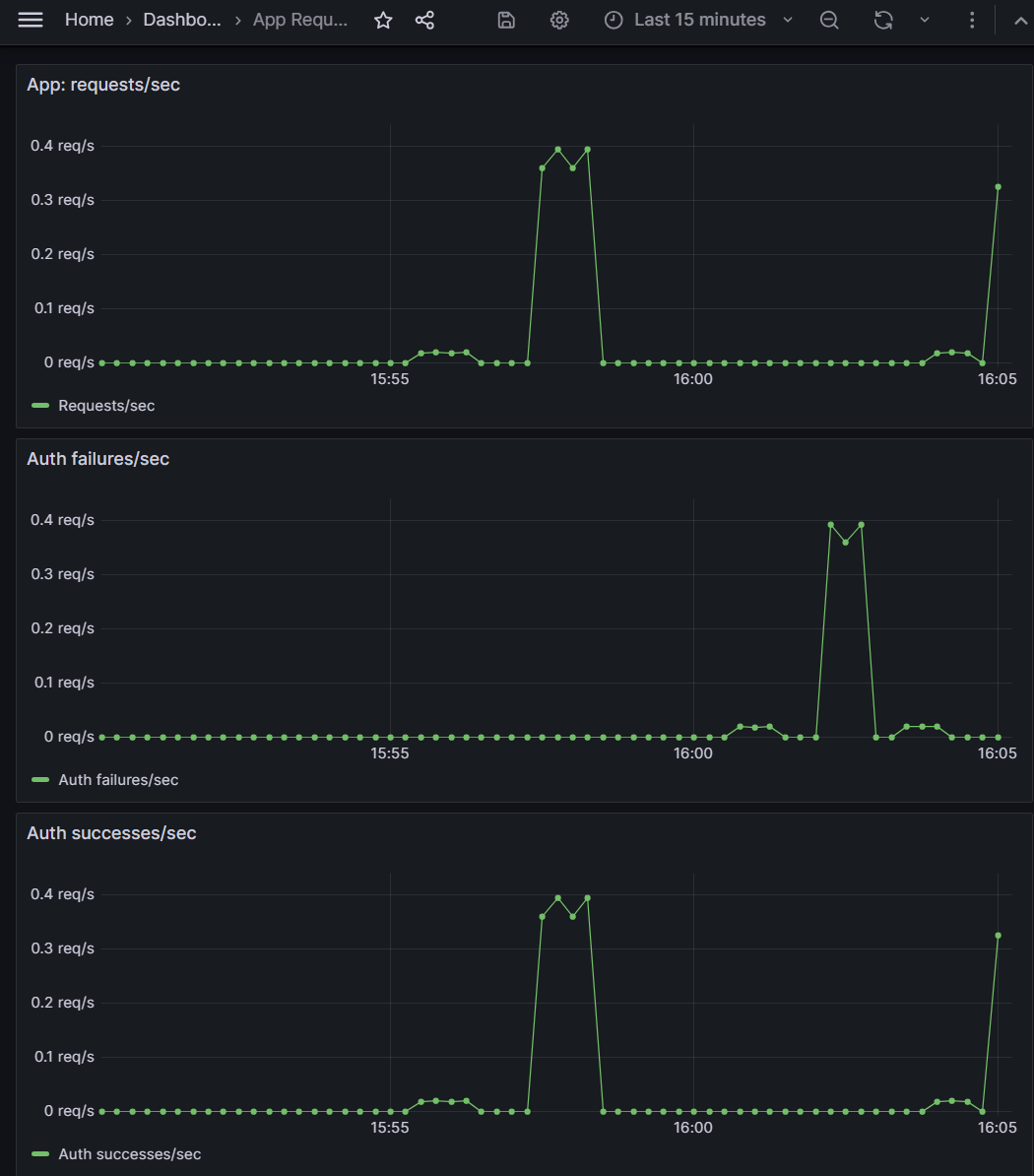

End-to-end incident response for a very common ticket: "everything is 401 now". I added simple API key auth to a Flask API behind Nginx, instrumented Prometheus metrics for requests and auth outcomes, then broke the API key on purpose. Grafana showed auth failures spiking while requests/sec stayed flat. I then realigned the configuration, restored 200s, and used metrics + dashboards to verify recovery.

Stack

Docker Compose • Nginx • Flask • Postgres • Prometheus • Grafana • Linux

What I Did

- Added header-based auth using X-API-Key and instrumented metrics: app_requests_total, app_auth_success_total, app_auth_failures_total.

- Built Grafana panels for requests/sec, auth failures/sec, and auth successes/sec.

- Baseline: valid key (apikey-v1) → 200s on /api/users, successes increment, failures stay 0.

- Broke auth by rotating the app's expected key without updating the caller → every request 401 Unauthorized.

- Used /metrics + Grafana to see failures spike, then fixed the config and confirmed that successes resumed and failures flattened.

Incident Timeline

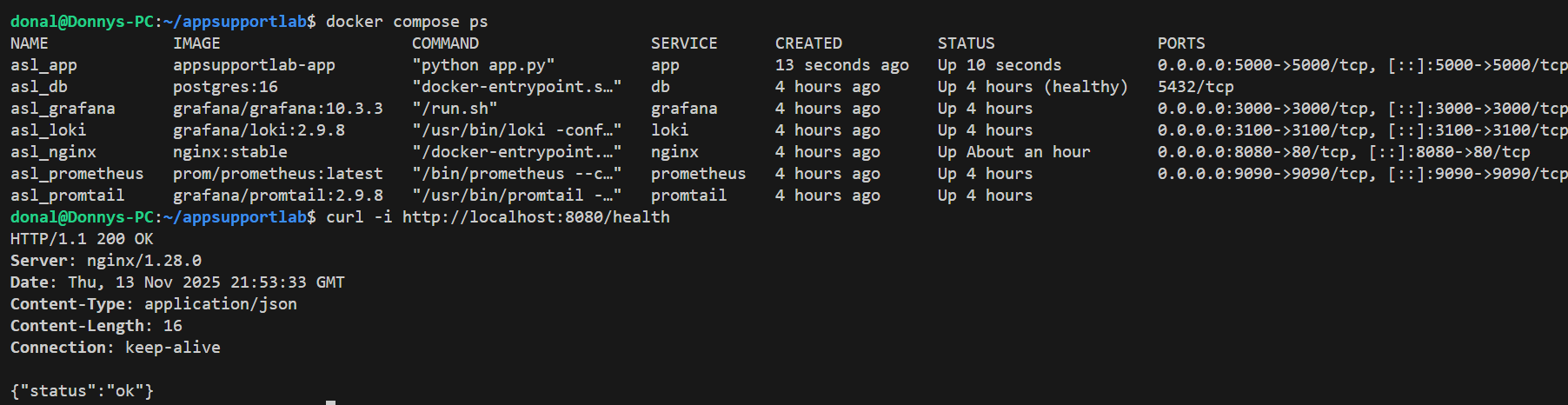

- Baseline: all services healthy, 200 on /api/users.

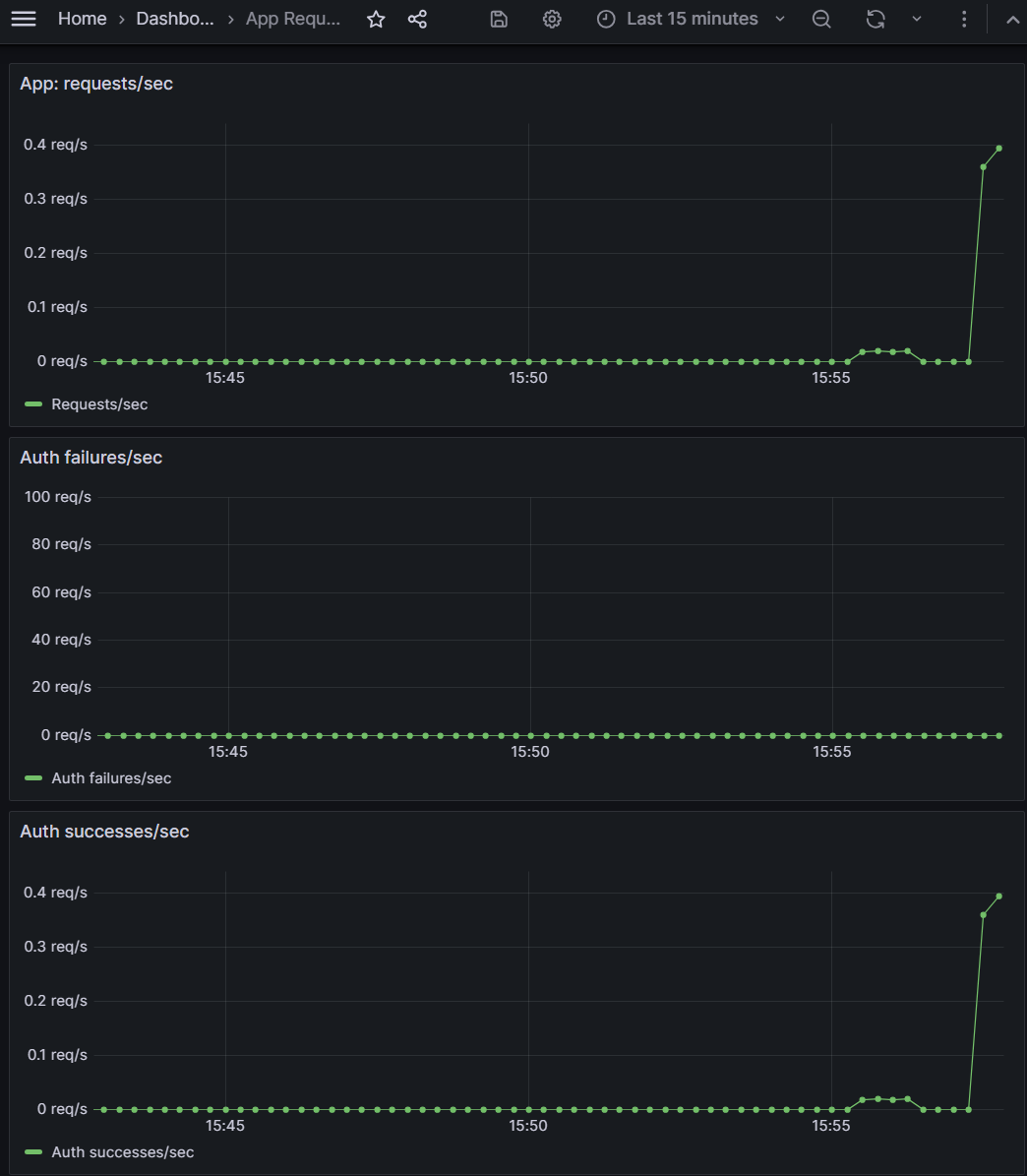

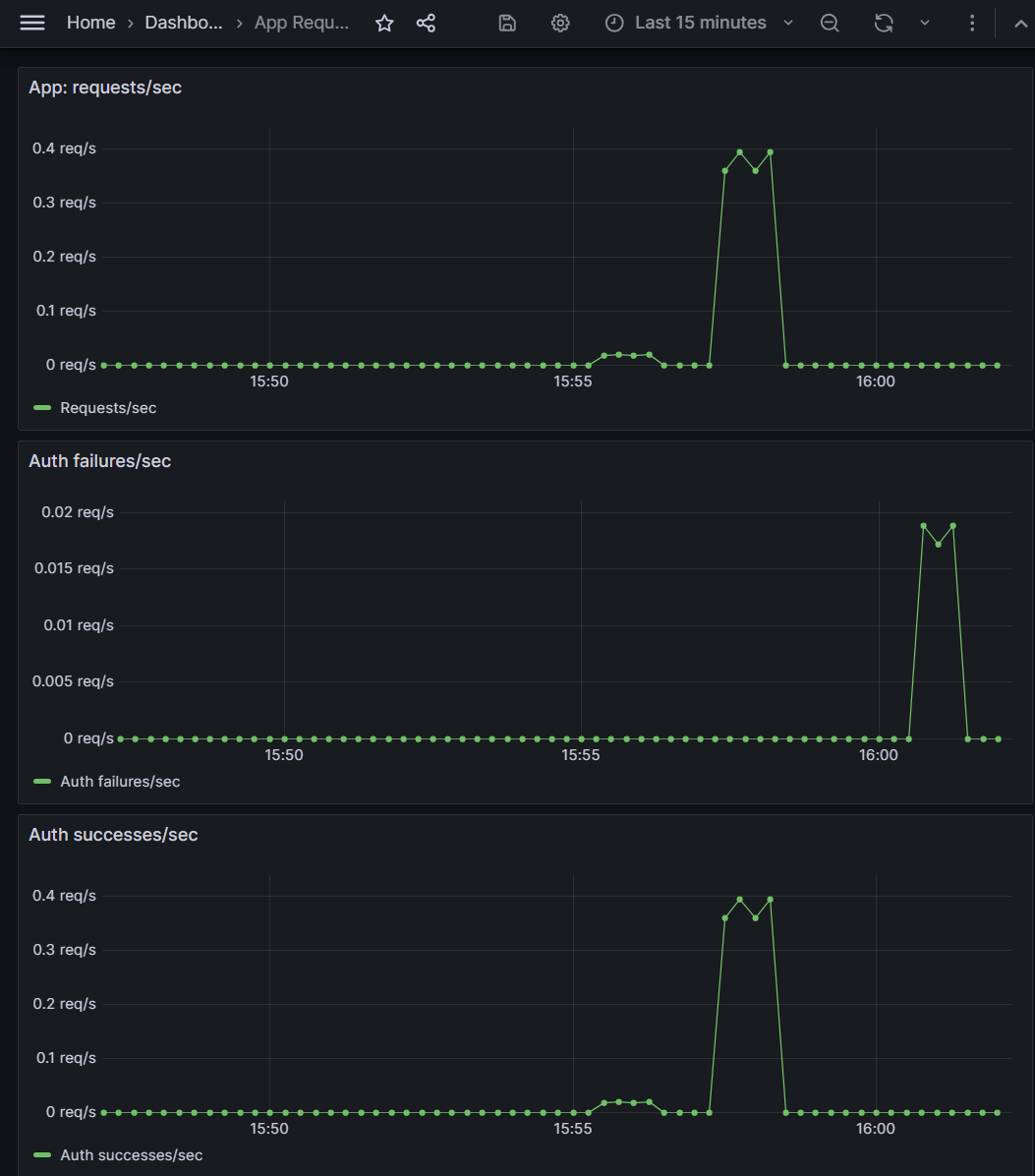

- Grafana shows steady requests/sec and auth successes/sec, with 0 failures.

- Rotate expected API key in docker-compose.yml → caller still sends old key.

- Requests flip to 401; auth failures/sec spikes while requests/sec stays steady.

- Align keys, redeploy app, validate 200s and stable auth metrics.

Incident Response Story

1) Baseline & Auth Instrumentation

First I confirmed the lab stack was healthy and that auth behaved correctly with a known-good key. Requests to /api/users with apikey-v1 returned 200 OK, /health was green, and the /metrics endpoint showed auth successes increasing with failures still at 0. This gave me a clean baseline for both the API and the observability layer.
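As a rough illustration of the auth path being baselined here, the sketch below shows a header check that bumps the three counters named in this write-up. It is not the project's actual Flask code: the authorize function, the in-memory metrics object, and the placeholder 200 body are assumptions made for illustration, and plain Python stands in for Flask and the Prometheus client so the snippet runs on its own.

```python
import hmac
import os
from collections import Counter

# Hypothetical in-memory stand-in for the three Prometheus counters.
metrics = Counter()

# APP_API_KEY is the variable set in docker-compose.yml in this write-up;
# the fallback value is only there so the sketch runs locally.
EXPECTED_KEY = os.environ.get("APP_API_KEY", "apikey-v1")


def authorize(headers, expected_key=EXPECTED_KEY):
    """Return (status_code, body) for one request and update the counters."""
    metrics["app_requests_total"] += 1
    # A plain dict is case-sensitive; a real framework's header object
    # would normally handle header-name casing for you.
    supplied = headers.get("X-API-Key", "")
    # compare_digest avoids leaking key contents through timing differences.
    if hmac.compare_digest(supplied.encode(), expected_key.encode()):
        metrics["app_auth_success_total"] += 1
        return 200, {"status": "ok"}  # placeholder body, not the real user list
    metrics["app_auth_failures_total"] += 1
    return 401, {"error": "unauthorized"}
```

With the expected key at apikey-v1, a request carrying X-API-Key: apikey-v1 yields 200 and increments app_auth_success_total. If the expected key changes and the caller does not, the same header yields 401 and increments app_auth_failures_total instead, while app_requests_total keeps climbing either way.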

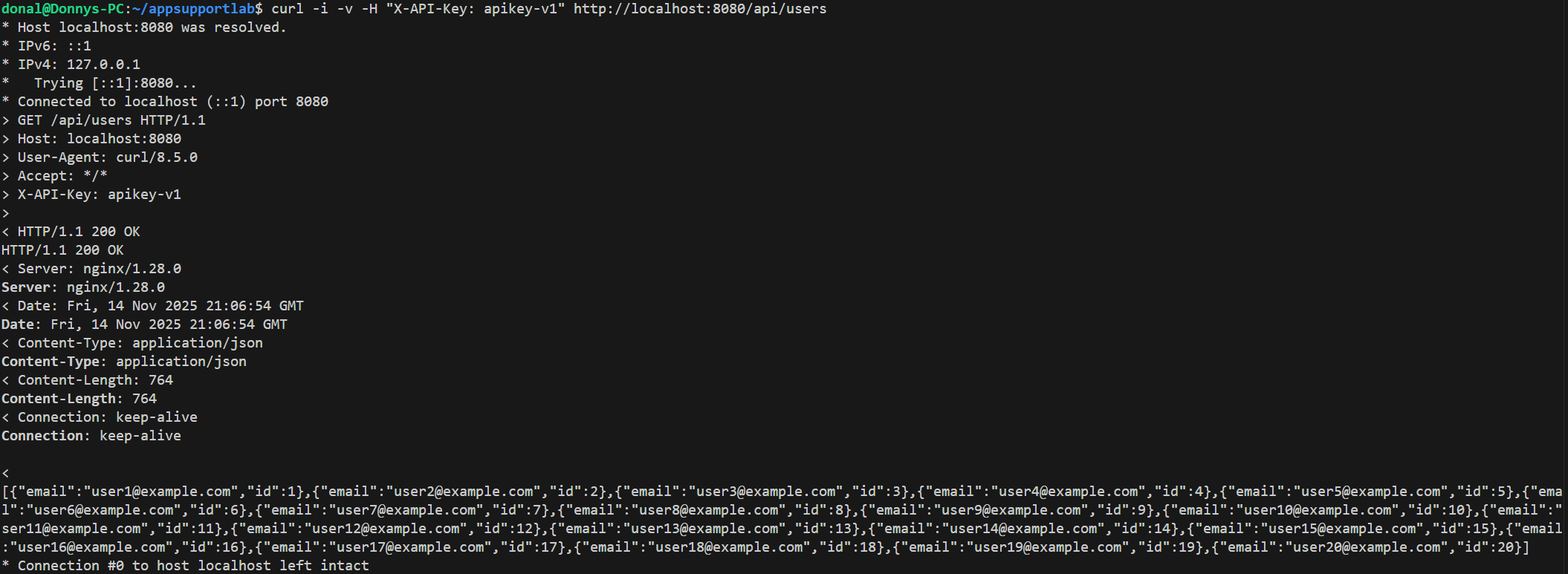

All services show as up in docker compose ps, and curl -i -H "X-API-Key: apikey-v1" against /api/users returns 200 OK.

/api/users with X-API-Key: apikey-v1 returns 200 OK and the JSON list of users. This proves the original key works end-to-end before we rotate anything.

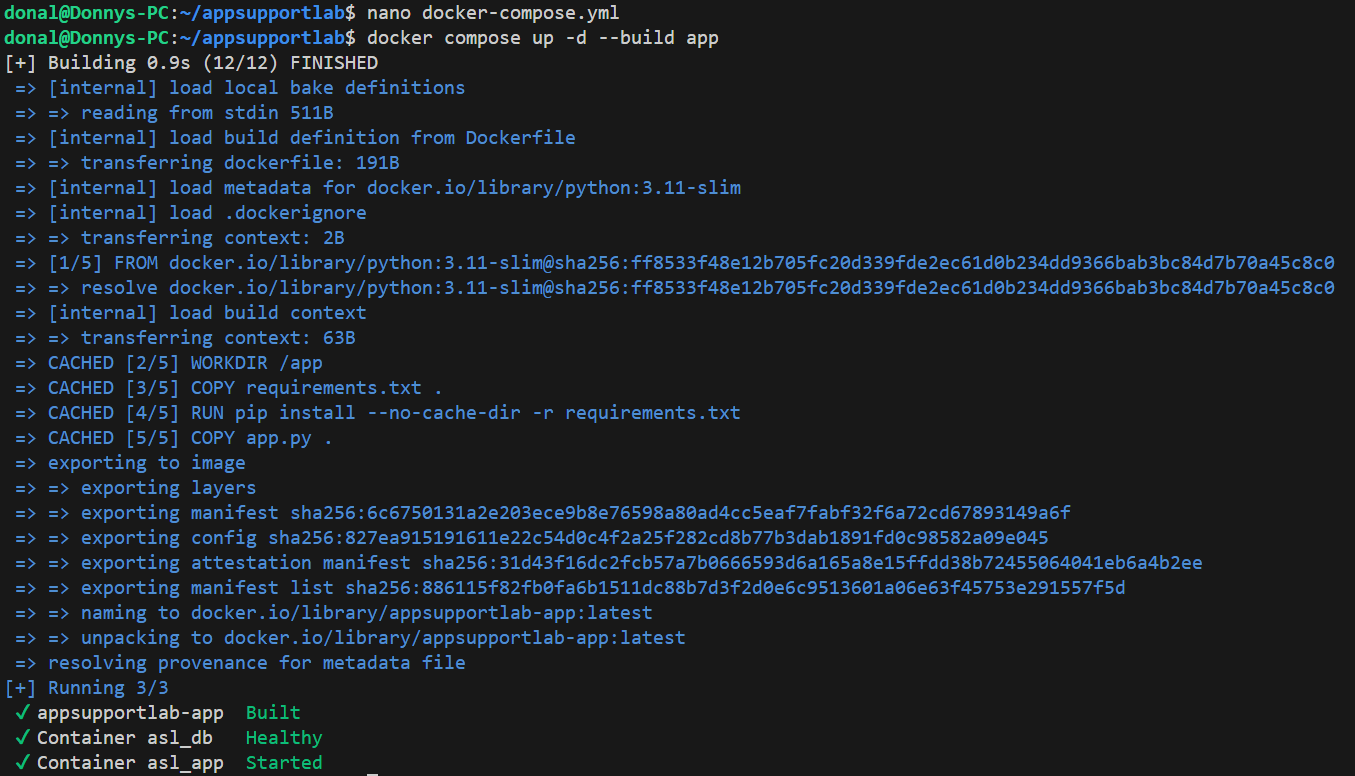

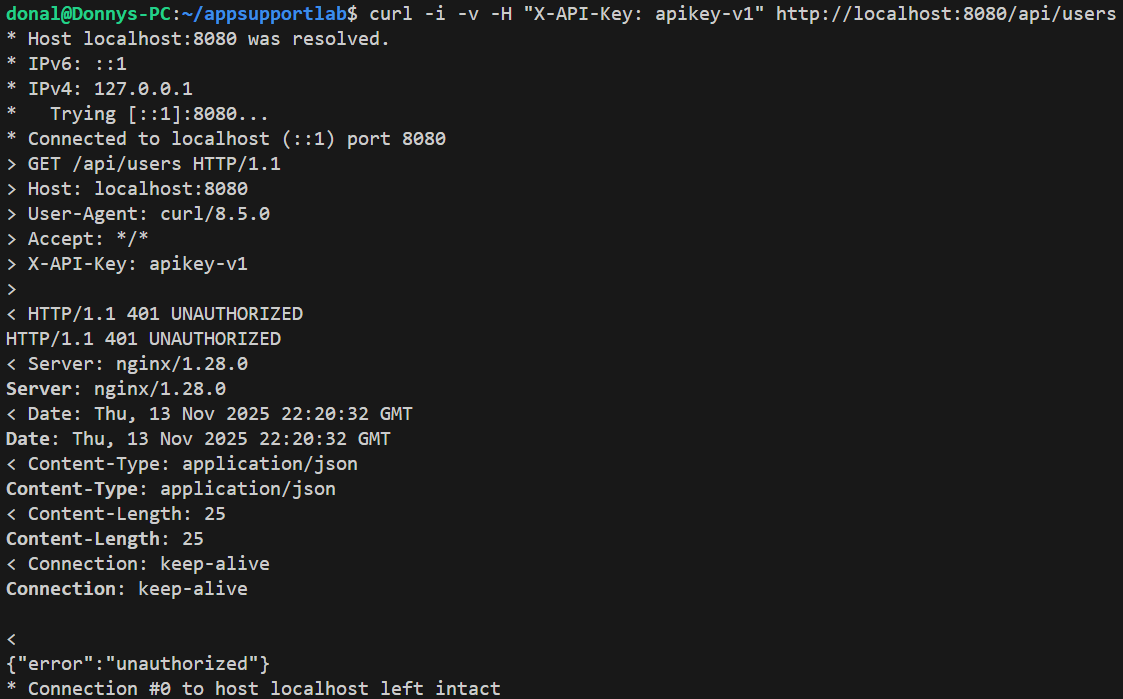

2) Introduce Auth Misconfiguration → 401s

To simulate a real-world auth break, I rotated the app's expected API key in docker-compose.yml to a new value (for example apikey-v2) and rebuilt only the app container. The caller still used the original apikey-v1 header, so the app started rejecting every request with 401 Unauthorized.

APP_API_KEY is changed in docker-compose.yml, then docker compose up -d --build app rolls the change into the running container.

The curl -i -v -H "X-API-Key: apikey-v1" call now gets HTTP/1.1 401 Unauthorized with {"error":"unauthorized"}. Classic "everything is 401 now" behavior.

3) Observe the Failure Pattern in Metrics

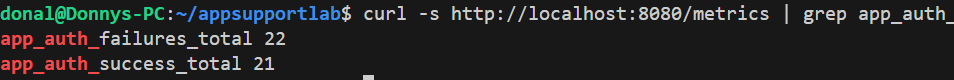

With the misconfig live, I drove steady traffic using a curl loop that kept sending the old key. In Grafana, requests/sec stayed flat (clients kept calling), but auth failures/sec spiked while successes/sec dropped to zero. At the same time, the counters scraped from /metrics showed failures overtaking successes.
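To make the panel behavior concrete, here is a simplified sketch of the per-second calculation behind a rate() panel, using two counter samples. The sample values are invented, and the function is only an approximation: Prometheus's rate() works over all samples in the window and extrapolates toward the window edges, which this sketch does not attempt.

```python
def per_second_rate(first, last):
    """Approximate rate between two (timestamp_seconds, counter_value) samples."""
    (t0, v0), (t1, v1) = first, last
    if t1 <= t0:
        raise ValueError("samples must be in increasing time order")
    if v1 < v0:
        # Counter went backwards (e.g. container restart): treat the
        # post-reset value as the increase over the interval.
        return v1 / (t1 - t0)
    return (v1 - v0) / (t1 - t0)


# Hypothetical samples taken 60 s apart during the outage.
requests = ((0, 100), (60, 280))
failures = ((0, 0), (60, 180))
successes = ((0, 100), (60, 100))

print(per_second_rate(*requests))   # 3.0
print(per_second_rate(*failures))   # 3.0
print(per_second_rate(*successes))  # 0.0
```

The shape to look for is the one in these numbers: requests/sec is unchanged, failures/sec matches it, and successes/sec is zero. That points at auth rather than at traffic loss or a dead service.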

/metrics: app_auth_failures_total has jumped ahead of app_auth_success_total, confirming what Grafana shows and giving a simple CLI view of the same story.

4) Recovery & Verification

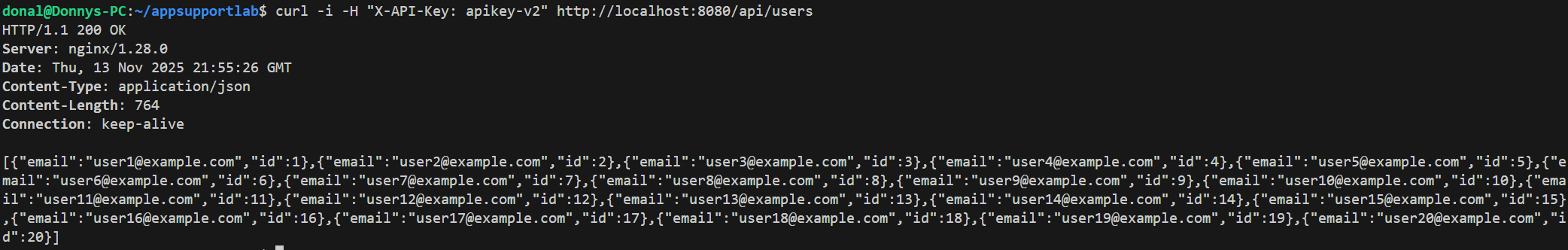

To fix the outage, I aligned the caller and server to a new shared key (apikey-v2), redeployed the app container, and re-ran the same traffic pattern. /api/users returned 200 again, app_auth_failures_total stopped increasing, and Grafana showed successes/sec back on top with failures/sec at zero.
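One way to check the "stopped increasing" claim outside Grafana is to take two snapshots of /metrics a few seconds apart, both after the redeploy, and compare them. The sketch below parses Prometheus text output and applies that comparison. The function names and sample values are hypothetical, and it assumes the counters are exposed without labels.

```python
def parse_counters(metrics_text, prefix="app_auth_"):
    """Collect unlabelled samples whose name starts with prefix."""
    values = {}
    for line in metrics_text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue  # skip blank lines and HELP/TYPE comments
        name, _, rest = line.partition(" ")
        if name.startswith(prefix) and rest:
            values[name] = float(rest.split()[0])
    return values


def auth_recovered(before, after):
    """True when failures stayed flat and successes grew between snapshots."""
    return (
        after["app_auth_failures_total"] == before["app_auth_failures_total"]
        and after["app_auth_success_total"] > before["app_auth_success_total"]
    )


# Hypothetical snapshots taken a few seconds apart, after the fix.
before = parse_counters("""\
# TYPE app_auth_failures_total counter
app_auth_failures_total 3.0
# TYPE app_auth_success_total counter
app_auth_success_total 10.0
""")
after = parse_counters("""\
app_auth_failures_total 3.0
app_auth_success_total 25.0
""")
print(auth_recovered(before, after))  # True
```

In the lab, the text passed to parse_counters would come from the same curl -s http://localhost:8080/metrics call used elsewhere in this write-up.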

With apikey-v2 in the header, /api/users is back to HTTP/1.1 200 OK and returns the full JSON user list.

Key Commands Used

Repro & Evidence

# Baseline: valid key (apikey-v1)

curl -i -H "X-API-Key: apikey-v1" http://localhost:8080/api/users

curl -s http://localhost:8080/metrics | grep app_auth_

# Introduce auth outage (rotate app key only)

# 1) Edit docker-compose.yml -> APP_API_KEY=apikey-v2

# 2) Redeploy app container:

docker compose up -d --build app

# Drive traffic that will now fail auth

for i in {1..40}; do

curl -s -o /dev/null -w "%{http_code}\n" -H "X-API-Key: apikey-v1" http://localhost:8080/api/users

sleep 0.3

done

# Check metrics from the CLI

curl -s http://localhost:8080/metrics | grep app_auth_

Grafana Panels & Recovery

# PromQL for Grafana panels

# App: requests/sec

rate(app_requests_total[1m])

# Auth failures/sec

rate(app_auth_failures_total[1m])

# Auth successes/sec

rate(app_auth_success_total[1m])

# Fix: align keys and redeploy

# 1) Edit docker-compose.yml -> APP_API_KEY=apikey-v2

docker compose up -d --build app

# 2) Call API with the new key

curl -i -H "X-API-Key: apikey-v2" http://localhost:8080/api/users

# 3) Confirm counters and graphs look healthy

curl -s http://localhost:8080/metrics | grep app_auth_

Outcome & Prevention

- Localized the outage to an API key mismatch between caller and app; traffic still reached the service but failed auth.

- Built a small, reusable dashboard for auth health: requests/sec vs auth successes/sec vs auth failures/sec.

- Captured a simple rotation runbook: update secret → redeploy → test with new key → confirm metrics (no new failures).

- This pattern generalizes to many real SaaS tickets: wrong API key, expired token, missing scopes, or misconfigured client secrets.
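The "test with new key" step of the rotation runbook above could be automated along the following lines. This is a minimal sketch, not part of the original lab: http_status and verify_rotation are hypothetical helpers, and the extra check that the old key is now rejected is an assumption about what a healthy rotation should look like.

```python
import urllib.error
import urllib.request


def http_status(url, api_key):
    """Return the HTTP status of a GET sent with the given X-API-Key header."""
    request = urllib.request.Request(url, headers={"X-API-Key": api_key})
    try:
        with urllib.request.urlopen(request, timeout=5) as response:
            return response.status
    except urllib.error.HTTPError as err:
        return err.code  # 401 and other error statuses arrive as HTTPError


def verify_rotation(status_for_key, new_key, old_key):
    """Return a list of problems; an empty list means the rotation looks healthy."""
    problems = []
    if status_for_key(new_key) != 200:
        problems.append("new key is not accepted")
    if status_for_key(old_key) != 401:
        problems.append("old key is still accepted")
    return problems


# Against the lab stack (URL taken from the commands in this write-up):
# url = "http://localhost:8080/api/users"
# print(verify_rotation(lambda key: http_status(url, key), "apikey-v2", "apikey-v1"))
```

Passing the status lookup in as a callable keeps the check easy to exercise without a running stack, for example with a stub that returns 200 for one key and 401 for everything else.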